Regurgitating Words - ChatGPT

We live in a world where information is everything. What happens when you are given information whose source or veracity cannot be verified?

The news over the past couple of months has been filled with hyperbole about ChatGPT.

Google is going to lose all of its business to ChatGPT.

People are going to lose their jobs to ChatGPT.

Professionals are not going to be needed because of ChatGPT.

You know, when the pandemic lockdowns started, pundits called for the demise of so many industries. Hell, I prognosticated as much myself. None of it panned out. Retail is back to pre-pandemic levels. Commercial real estate continues to have healthy occupancy.

Let us dive into what ChatGPT really is and what it can and cannot do.

Even before we start, let me tell you, it cannot replace Google. Yet.

In 1950, Alan Turing, the man whose work breaking the Enigma machine helped the Allies win the Second World War, and also the man the good people of England hounded to his death for being gay, proposed the Turing Test. The test was meant to determine whether a machine could pass for a human.

Turing made people realise how far a computer could go.

At the time, work in the nascent field of Natural Language Processing had begun, and by the 1960s there were systems that could translate between languages. Alas, researchers did not have access to the computing power of the cloud or the ability to scale it almost infinitely.

There are many ways to process language. When a child learns a language, he or she listens to words and associates a meaning with each one. We humans then learn to string words together to convey what we are thinking. This requires actual thought, as well as an understanding of how the structure and meaning of language work. To understand that, we would need to understand how the brain works, and we know laughably little about what actually goes on in the roughly 1.3 kg of tissue between our ears.

Since we know so little about it, scientists have been trying to devise ways in which an algorithm could learn a language. One such approach is the Large Language Model (LLM). A large language model is, roughly, the equivalent of reading 10,000 books and building a probability list of which word is likely to follow another. It is brute-force learning.

When constructing a word, if the first letter is ‘Q’, then there is a 97% chance that the next letter is ‘u’.

If the first word is ‘This’ there is probably a 25% chance that the next word is ‘is’.

An LLM computes these probabilities and predicts the next word.
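To make the "probability list" idea concrete, here is a toy sketch: count which word follows which in a tiny, made-up corpus, turn those counts into probabilities, and pick the most likely follower. The corpus and function names are hypothetical, chosen for illustration only. Real models such as GPT use neural networks over sub-word tokens rather than raw word counts, so treat this as the intuition, not the implementation.

```python
from collections import Counter, defaultdict

def build_bigram_model(text):
    """Map each word to a probability distribution over the words that follow it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Count every (word, next word) pair in the corpus
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    # Normalise each word's follower counts into probabilities
    model = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        model[word] = {nxt: n / total for nxt, n in followers.items()}
    return model

def predict_next(model, word):
    """Return the most probable next word, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return max(followers, key=followers.get)

# Hypothetical toy corpus standing in for the "10,000 books"
corpus = "this is a test this is only a test this was fun"
model = build_bigram_model(corpus)
print(predict_next(model, "this"))  # is  ("is" follows "this" 2 times out of 3)
```

Scale the corpus up from one sentence to a large slice of the web and the same "what usually comes next?" question starts producing fluent text, which is exactly why it can sound knowledgeable without knowing anything.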

Language models have been around for decades. They just have not been this large. In the old Nokia phones, the T9 dictionary worked out which word you meant from the sequence of number keys you pressed. This was at a time when we had only a numeric keypad to type text on.
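T9 worked by mapping each number key to a handful of letters and looking up the key sequence in a built-in dictionary, offering the most common matching word first. Here is a minimal sketch of that idea. The tiny dictionary and its frequency counts are hypothetical, made up for illustration; the real T9 word list and ranking were its own.

```python
# Standard phone keypad: each digit key carries several letters
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
# Reverse lookup: letter -> the key you press for it
LETTER_TO_KEY = {letter: key for key, letters in KEYPAD.items() for letter in letters}

def to_keys(word):
    """Convert a word into the digit sequence you would press to type it."""
    return "".join(LETTER_TO_KEY[ch] for ch in word.lower())

def t9_candidates(keys, dictionary):
    """Return dictionary words matching the key sequence, most frequent first.

    dictionary maps word -> frequency (a hypothetical stand-in for T9's word list).
    """
    matches = [w for w in dictionary if to_keys(w) == keys]
    return sorted(matches, key=lambda w: dictionary[w], reverse=True)

# Hypothetical word frequencies; all four words are typed as 4-6-6-3
words = {"good": 120, "home": 80, "gone": 60, "hood": 5}
print(t9_candidates("4663", words))  # ['good', 'home', 'gone', 'hood']
```

Pressing 4-6-6-3 is ambiguous, so the phone simply bets on the most frequent word, the same "most likely candidate wins" logic that LLMs apply at enormous scale.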

With smartphones, we have had predictive text for over a decade now. Type a word and the keyboard suggests 3 words that could follow it. These algorithms typically combined general word probabilities with your own texting habits to pick those suggestions.
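A rough sketch of how a keyboard might rank its three suggestions, assuming it simply adds up general word-follow counts and your personal counts, with your own habits weighted more heavily. The `personal_weight` parameter and all the data are hypothetical; real keyboards use their own, often proprietary, models.

```python
from collections import Counter

def suggest(prev_word, general_counts, personal_counts, k=3, personal_weight=2.0):
    """Return the top-k next-word suggestions after prev_word.

    general_counts and personal_counts map a word to a Counter of the words that
    followed it. Weighting personal history more heavily is an assumption.
    """
    scores = Counter()
    # Start from how often each word follows prev_word in general text
    for word, n in general_counts.get(prev_word, Counter()).items():
        scores[word] += n
    # Boost words you personally tend to type after prev_word
    for word, n in personal_counts.get(prev_word, Counter()).items():
        scores[word] += personal_weight * n
    # most_common sorts by score, highest first
    return [word for word, _ in scores.most_common(k)]

# Hypothetical counts
general = {"see": Counter({"you": 50, "the": 30, "it": 20, "what": 10})}
personal = {"see": Counter({"ya": 40})}
print(suggest("see", general, personal))  # ['ya', 'you', 'the']
```

Your habit of typing "see ya" outranks the more common "see you", which is the blend of general probability and personal history described above.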

OpenAI, founded as a not-for-profit, started building an algorithm it called the Generative Pre-trained Transformer (GPT).

Generative pre-trained transformers (GPT) are a family of language models generally trained on a large corpus of text data to generate human-like text. They are built using several blocks of the transformer architecture. They can be fine-tuned for various natural language processing tasks such as text generation, language translation, and text classification. The "pre-training" in its name refers to the initial training process on a large text corpus where the model learns to predict the next word in a passage, which provides a solid foundation for the model to perform well on downstream tasks with limited amounts of task-specific data.

Source: Wikipedia

When they started with GPT-1, they trained the algorithm on about 7,000 books and made predictions based on those. By GPT-3, they were training it on a large slice of the web (plus books and Wikipedia), roughly 300 billion tokens of text, and the model itself had 175 billion parameters.

The probability prediction now takes context into account as well, so it can produce different words depending on what is being discussed.

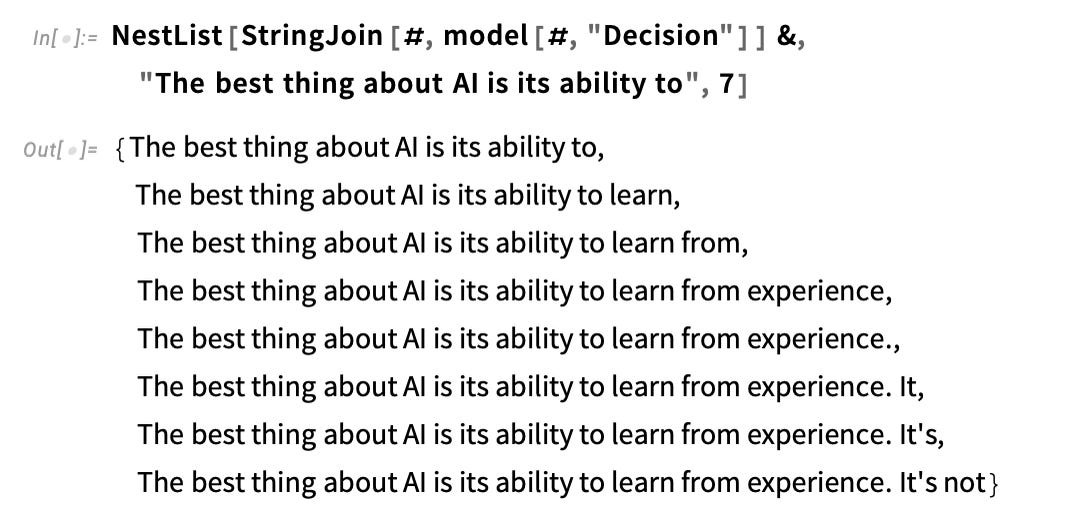

Source: Wolfram

In the example above, you can see the words being predicted one after the other, starting from the initial string “The best thing about AI is its ability to”. Each prediction is based on the probability distributions learned from many kinds of text.
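The word-by-word loop in the figure can be sketched as repeatedly asking a model for the likely next word and feeding the result back in. This toy version makes two simplifying assumptions: it uses only the previous two words as context (a trigram model), and it always takes the single most likely word (greedy decoding). GPT looks at far more context and, in practice, usually samples with some randomness instead. The corpus and names are hypothetical.

```python
from collections import Counter, defaultdict

def build_trigram_model(text):
    """Map each pair of consecutive words to a Counter of the words that follow it."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for a, b, c in zip(words, words[1:], words[2:]):
        model[(a, b)][c] += 1
    return model

def generate(model, prompt, max_words=5):
    """Greedily extend the prompt one word at a time, feeding each output back in."""
    words = prompt.lower().split()
    for _ in range(max_words):
        context = tuple(words[-2:])  # only the last two words act as context
        followers = model.get(context)  # .get avoids creating empty entries
        if not followers:
            break  # this context never appeared in the corpus: stop
        next_word, _ = followers.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)

# Hypothetical toy corpus
corpus = ("the best thing about ai is its ability to learn from data "
          "and people praise its ability to learn from data")
model = build_trigram_model(corpus)
print(generate(model, "the best thing about ai is its ability to", max_words=3))
# the best thing about ai is its ability to learn from data
```

Nothing in the loop checks whether the sentence is true; it only checks what usually came next in the text it was built from.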

GPT is a word regurgitator. It is incapable of thought, analysis or even objectivity. If you need a 500-word blog post that places 3 keywords 17 times, you can count on GPT to deliver it. If you want to make investment decisions, you would be wise to steer away.

With ChatGPT, OpenAI has created a GPT that you and I can interact with directly. In other words, instead of typing the NestList query in the example above, we can simply ask for what we need in plain English.

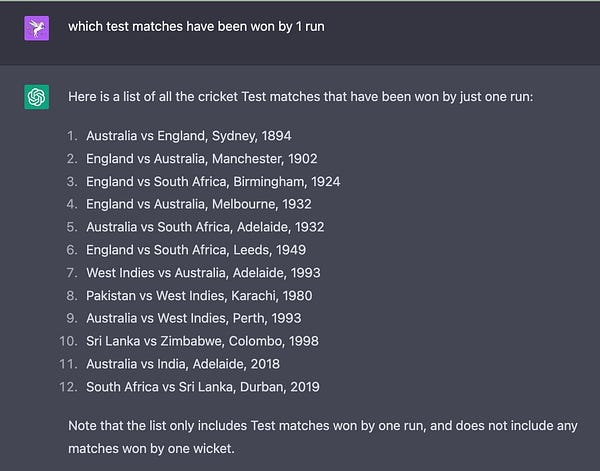

Source: ChatGPT

This has led many people to jump to conclusions and call for the early funeral of Google. They think ChatGPT will replace Search. When you can get a specific answer instead of 100 links, are you not better off?

But here is the thing: ChatGPT does not know right from wrong. Its only capability is predicting the next word. In the example above, the words are predicted on the basis of the hundreds of articles about Niagara Falls it has read, from Wikipedia to Quora.

Ask it something a little more esoteric, something that is not discussed heavily on the web, and it all starts coming apart.

If you are senior enough to have interviewed people, you will have come across those who can confidently bullshit you into thinking they know. The moment you hire them, you regret it. That hire is ChatGPT.

ChatGPT is like that boy in your class who knows where he can copy all the answers from. It has scoured the entire web and built a list of probabilities for which word follows which in a given context, but it does not know what it is actually saying.

Here are the things that ChatGPT will not and cannot do:

- It cannot find things that are actually relevant to the question you are asking. It can only predict which block of text fits best based on probabilities, and it does not confirm any of it against other sources.

- It cannot verify facts. Not that Google can! But Google offers you 10 alternatives to look at so you can make your own judgement. ChatGPT just hands you gospel truth.

- It cannot come up with original thought. It has read a lot, but it can only repeat what it has already found on the web.

ChatGPT functions a little like taking a photocopy of a photocopy. Over time, it looks nothing like the original but that does not mean that something original has been created. It is just a distortion of the original. And there is a possibility that that distortion is also inaccurate.

ChatGPT is a great tool to play with, but it is nowhere close to replacing Google, for me. And that is where the problem begins.

What’s the issue?

Most humans are idiots.

The human propensity to trust anything a computer dishes out has risen astronomically over the last 10 years. We watch YouTube videos and get enraged without even checking the source. We read WhatsApp forwards and distribute the hell out of them without knowing where they started or who had a vested interest in putting them out.

Imagine assuming that everything ChatGPT spouts is gospel truth, then spreading and amplifying it. Worse, there is no way to find the source for what ChatGPT spouts. I doubt even ChatGPT knows!

The whole problem with the world is that fools and fanatics are always so certain of themselves, and wiser people so full of doubts.

~ Bertrand Russell

ChatGPT falls in the former category.

Ask ChatGPT anything and it is so certain of itself. Given how lazy we have become, we will not make the slightest effort to verify what it says. We will copy-paste ChatGPT's output as blog posts on our sites, and the algorithm will then train on more of the same bias. Why do I say this?

There were over 200 e-books in Amazon’s Kindle store as of mid-February listing ChatGPT as an author or co-author, including "How to Write and Create Content Using ChatGPT," "The Power of Homework" and poetry collection "Echoes of the Universe." And the number is rising daily. There is even a new sub-genre on Amazon: Books about using ChatGPT, written entirely by ChatGPT.

Source: Reuters

AI will not harm us. Our laziness definitely will.
