Content Paradigm

LLMs are merely a content paradigm, and they are fast turning into commodities. In 15 years, we may speak of them the way we speak of databases today.

Computing has evolved through a series of paradigms, each driven by our needs at the time. It started with the raw need to compute. During the Second World War, two tasks demanded enormous amounts of computation: calculating ballistic trajectories and breaking encrypted messages. The US and UK defence departments hired rooms full of women to calculate ballistic trajectories by hand, and they wanted this computation done faster. Alan Turing, meanwhile, was tasked with breaking the Enigma cipher.

What was needed was the ability to calculate ever faster. After the invention of the transistor in 1947, calculations could be done at electronic speeds. This set off a race to build supercomputers. This was the first computing paradigm: computation for its own sake.

In the 1960s and 70s, the next great leap arrived in the form of the database. Everything was turned into a database, and this gave us companies like Oracle and SAP. With so much compute available, there was a need to organise data so that proper analysis could be performed on it.

Then came the networking paradigm, first in the form of at-scale networks within organisations, which saw companies like Cisco rise. Then, in the 90s, the World Wide Web, developed at CERN, took the Internet, which had grown out of a DARPA project, to the masses.

The first decade of this millennium was spent combining the power of the database and the network to deliver connectivity and pattern identification at an unprecedented scale. This combination made social media possible. Some entrepreneurs slapped a real-world layer on top of it to invent the sharing economy. Companies like Uber, Airbnb, DoorDash and Zomato combine databases and networks with real-world operations to deliver services to us.

When you recognise patterns, some intelligence is inevitably delivered.

In the late 90s, two researchers at Stanford decided to download the entire internet to look for patterns; they created Google. Google undertook pattern recognition in a way that no previous search engine had. But they did not go around calling their product AI.

Funded in part by Elon Musk, a group of researchers founded OpenAI in late 2015 and started building algorithms that look for patterns in how words are used across the internet, based on their context. OpenAI's work is nothing but an extension of the same tools to recognise patterns in content. What we call AI today is nothing but a content paradigm.

The answers that “AI” delivers are what you would get if you asked a 16-year-old to browse the internet and come up with an answer to a question. The difference is that the 16-year-old would do it on the energy of a banana, while the “AI” needs three nuclear reactors to deliver the answer.

Spare a moment to consider what this means.

If it takes that much energy to replace a human being, companies are not going to save money by switching to AI. The only thing they will reduce is managerial bandwidth.

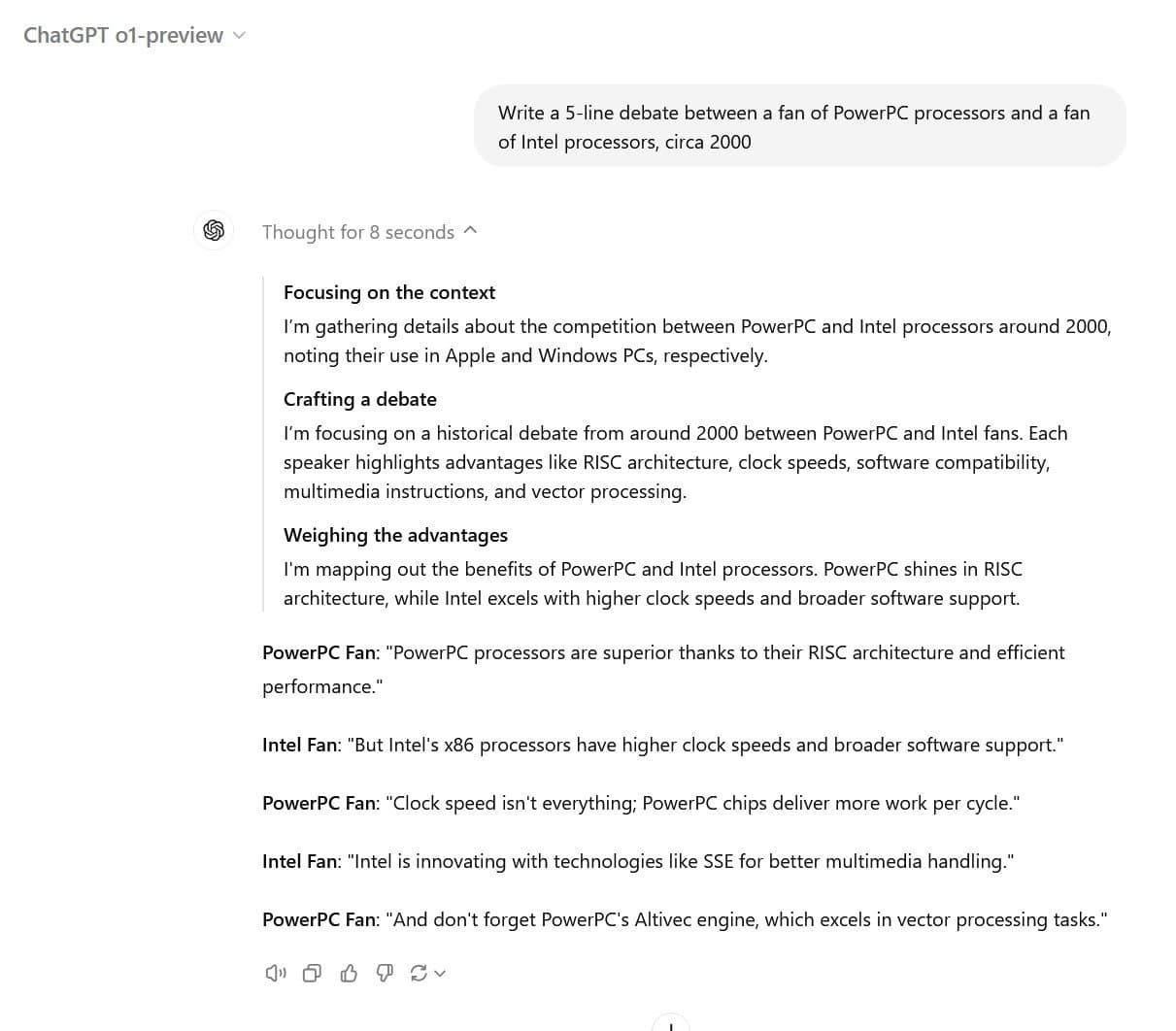

Sam Altman is borrowing a page from Elon Musk's book and promising AI that is capable of thinking. At the same time…

OpenAI truly does not want you to know what its latest AI model is "thinking." Since the company launched its "Strawberry" AI model family last week, touting so-called reasoning abilities with o1-preview and o1-mini, OpenAI has been sending out warning emails and threats of bans to any user who tries to probe how the model works.

[…]

Along the way, OpenAI is watching through the ChatGPT interface, and the company is reportedly coming down hard on any attempts to probe o1's reasoning, even among the merely curious.

Source: ArsTechnica

As Iron Man says in The Avengers: "An intelligence organisation that fears intelligence? Historically, not awesome."

The reason is not competitive advantage or anything remotely like it. Given that OpenAI is based in the US, the reason is lawsuits. Once the question of what the model is thinking is answered, the next question is: where did it gather its facts? Exposing the reasoning would also make it harder for the company to claim that the entire thing is a black box.

When companies have used algorithms that dished out obviously racist credit ratings, they have protected themselves by claiming that the AI is a black box and that there is no way for them to know how it arrived at its conclusions.

Similar biases could surface when an LLM's reasoning is probed, and that can quickly turn into a PR nightmare.

The most interesting part is that research is starting to show that most of these models are incapable of “reasoning”.

For a while now, companies like OpenAI and Google have been touting advanced "reasoning" capabilities as the next big step in their latest artificial intelligence models. Now, though, a new study from six Apple engineers shows that the mathematical "reasoning" displayed by advanced large language models can be extremely brittle and unreliable in the face of seemingly trivial changes to common benchmark problems.

[…]

[They start with] GSM8K's standardized set of over 8,000 grade-school level mathematical word problems, which is often used as a benchmark for modern LLMs' complex reasoning capabilities. They then take the novel approach of modifying a portion of that testing set to dynamically replace certain names and numbers with new values—so a question about Sophie getting 31 building blocks for her nephew in GSM8K could become a question about Bill getting 19 building blocks for his brother in the new GSM-Symbolic evaluation.

[…]

Adding in these red herrings led to what the researchers termed "catastrophic performance drops" in accuracy compared to GSM8K, ranging from 17.5 percent to a whopping 65.7 percent, depending on the model tested. These massive drops in accuracy highlight the inherent limits in using simple "pattern matching" to "convert statements to operations without truly understanding their meaning," the researchers write.

Source: ArsTechnica
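The kind of name-and-number substitution described in the excerpt is easy to picture with a small sketch. What follows is a hypothetical illustration in Python, not the Apple researchers' code: the template wording, the names, the value ranges, the red-herring sentence and the `make_variant` helper are all assumptions made up for this example. It samples fresh values for one word problem, optionally adds an irrelevant sentence, and recomputes the correct answer from the sampled values.

```python
import random

# Hypothetical template, loosely modelled on the "building blocks" example quoted
# above. The wording and value ranges are illustrative assumptions only.
TEMPLATE = (
    "{name} gets {blocks} building blocks for {pronoun} {relative}. "
    "{name} then buys {extra} more.{red_herring} "
    "How many building blocks does {name} have now?"
)

NAMES = [("Sophie", "her"), ("Bill", "his"), ("Priya", "her")]
RELATIVES = ["nephew", "brother", "cousin"]

# An irrelevant sentence: it mentions the blocks but does not change the arithmetic.
RED_HERRING = " Five of the blocks are slightly smaller than the others."


def make_variant(rng: random.Random, with_red_herring: bool = False) -> tuple[str, int]:
    """Return one templated question and its correct answer."""
    name, pronoun = rng.choice(NAMES)
    relative = rng.choice(RELATIVES)
    blocks = rng.randint(10, 50)
    extra = rng.randint(1, 20)
    question = TEMPLATE.format(
        name=name,
        blocks=blocks,
        pronoun=pronoun,
        relative=relative,
        extra=extra,
        red_herring=RED_HERRING if with_red_herring else "",
    )
    # The ground truth is recomputed from the sampled values for every variant.
    return question, blocks + extra


if __name__ == "__main__":
    # Same seed, so both variants share names and numbers; only the red herring differs.
    plain = make_variant(random.Random(7))
    noisy = make_variant(random.Random(7), with_red_herring=True)
    for question, answer in (plain, noisy):
        print(question, "->", answer)
```

With the same seed, the two questions differ only by the added sentence, and the correct answer is identical. Something that genuinely reasons should not be thrown off by that difference.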

Real reasoning does not fall apart when superficial details of a problem change. It is safe to say that what we refer to as AI or GenAI are smooth regurgitation machines.

What is being called AI is merely a content paradigm in a long list of paradigms that have come and gone.

Also, I have yet to come across people who are entirely comfortable with outsourcing their content to such tools.

IBM created one of the earliest large-scale databases to help American Airlines build Sabre, which became the industry's de facto standard for airline booking. Other databases followed, and soon the database was a commodity, with different ones available depending on the nature of the requirement.

LLMs will be commoditised in the same way. There will be several models from several companies, each meant to address a different purpose.

This too shall pass! In 15 years, we will look back at this period and wonder what all the furore was about.

This got me thinking! Here is a perspective I have not heard anyone raise to date.

Would WE be allowed to ask any questions 15 years from now?